Function Calling

Function calling is the ability of large language models (LLMs) to invoke external functions or APIs in response to user queries. For example, if a user asks for the current weather in a specific location, the LLM can use function calling to call a weather API, fetch real-time data, and present it in a structured response.

This capability allows LLMs to enhance their functionality by integrating with other systems, services, or databases to provide real-time data, perform specific tasks, or trigger actions.

How Function Calling Works

- User Query: A user asks the LLM something that requires external data or a specific action (e.g., “Check the weather in New York” or “Book an appointment”).

- Function Identification: The LLM identifies that the query requires a specific function to be called. For instance, if the user asks for the weather, the model recognizes that it needs to call a weather API rather than generate a general response.

- Parameter Extraction: The LLM analyzes the user’s query to extract the required parameters (e.g., location, date, or other variables). For example, in the weather query, “New York” would be extracted as the location parameter.

- Call the Function: The LLM triggers an external function or API with the appropriate parameters. This could involve fetching live data, performing a task (e.g., making a booking), or retrieving information that is outside the LLM’s static knowledge.

- Return the Result: The function returns the result (such as the current weather data), which the LLM incorporates into its response back to the user.
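The steps above can be sketched in a few lines of Python. This is a minimal illustration, not any particular vendor's API: the `FUNCTIONS` registry, the `dispatch` helper, the `name`/`arguments` call shape, and the canned weather data are all hypothetical. Function identification and parameter extraction happen inside the model, so the sketch hard-codes what a model might emit for “Check the weather in New York”.

```python
import json
from typing import Any, Callable, Dict


def get_weather(location: str) -> Dict[str, Any]:
    """Hypothetical stand-in for a real weather API call (returns canned data)."""
    canned = {"New York": {"temp_f": 72, "conditions": "sunny"}}
    return canned.get(location, {"error": f"no data for {location}"})


# Registry mapping the function names a model may request to Python callables.
FUNCTIONS: Dict[str, Callable[..., Dict[str, Any]]] = {"get_weather": get_weather}


def dispatch(call: Dict[str, Any]) -> str:
    """Call the Function / Return the Result: run the requested function, return JSON text."""
    name = call["name"]
    if name not in FUNCTIONS:
        return json.dumps({"error": f"unknown function: {name}"})
    # Assumption: the extracted parameters arrive as a JSON-encoded string.
    args = json.loads(call["arguments"])
    return json.dumps(FUNCTIONS[name](**args))


# What the model might produce after Function Identification and Parameter Extraction.
model_call = {"name": "get_weather", "arguments": json.dumps({"location": "New York"})}

# The JSON result is handed back to the model, which folds it into its reply.
result = dispatch(model_call)
print(result)
```

Keeping the registry explicit means the model can only trigger functions the application has deliberately exposed; an unknown name yields an error payload rather than an exception.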

Configuring Function Calling

Below is an example of the Settings message with the agent.think configuration object that includes function calling capabilities. To see a complete example of the Settings message, see the Configure the Voice Agent documentation.

Client-Side Function Calling

If your function runs client-side and does not need to make a request to a server, omit the endpoint object; the url, headers, and method fields are not needed.

In the example below, the get_weather function is triggered when someone asks the agent about the weather in a particular place.

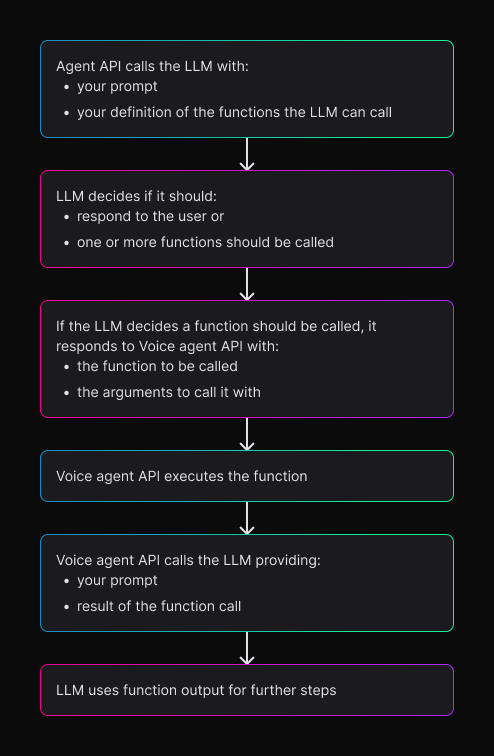
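For readers who want a text version to adapt, here is a rough sketch of how such a configuration might be assembled in Python. Only the names Settings, agent.think, endpoint, url, headers, method, and get_weather come from this page. The `functions` key, the `"type": "Settings"` field, the JSON Schema style `parameters` object, the `function_definition` helper, and the example.com URL are assumptions for illustration, and other Settings fields are left out entirely. Check the Configure the Voice Agent documentation for the authoritative schema.

```python
import json
from typing import Any, Dict, Optional


def function_definition(
    name: str,
    description: str,
    parameters: Dict[str, Any],
    endpoint: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Build one function entry; the endpoint object is left out for client-side functions."""
    definition: Dict[str, Any] = {
        "name": name,
        "description": description,
        "parameters": parameters,  # assumed to be a JSON Schema style object
    }
    if endpoint is not None:
        definition["endpoint"] = endpoint
    return definition


weather_parameters = {
    "type": "object",
    "properties": {
        "location": {"type": "string", "description": "City name, e.g. New York"}
    },
    "required": ["location"],
}

# Client-side variant: no endpoint, so no url, headers, or method.
get_weather_def = function_definition(
    "get_weather", "Get the current weather for a location.", weather_parameters
)

# Server-side variant for comparison; the URL and header values are placeholders.
get_weather_server_def = function_definition(
    "get_weather",
    "Get the current weather for a location.",
    weather_parameters,
    endpoint={
        "url": "https://example.com/weather",
        "method": "POST",
        "headers": {"Authorization": "Bearer <token>"},
    },
)

# Assumed placement of the function list inside the Settings message.
settings = {"type": "Settings", "agent": {"think": {"functions": [get_weather_def]}}}
print(json.dumps(settings, indent=2))
```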

Function Calling Message Flow

Two types of function calling messages are exchanged between the client and Deepgram’s Voice Agent API server over a WebSocket connection.

A FunctionCallRequest message is used to initiate function calls in your Voice Agent. This message can trigger either a server-side function execution or request a client-side function execution, depending on the client_side property setting.

A FunctionCallResponse can be sent by the client or the server. When sent from the client, it is the response to a function call. When sent from the server, it carries information about a function call that was requested by the agent.

Below is an example of a function call message flow based on the get_weather function example above.

- User submits a query → The agent determines a function is needed.

- Server sends a FunctionCallRequest → Requests function execution.

- Client executes the function and sends a FunctionCallResponse → Returns the function result.

- Server uses the response.

- The agent continues the conversation.
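The client's part of this flow can be sketched as a small message handler. The message type names and the client_side property come from this page. The remaining field names (`functions`, `id`, `name`, `arguments` as a JSON string, `content`) are assumptions about the message shape and should be verified against the API reference. The WebSocket itself is left out: `handle_server_message` is a hypothetical helper that takes the text of one incoming message and returns the replies the client would send back.

```python
import json
from typing import Any, Callable, Dict, List


def get_weather(location: str) -> Dict[str, Any]:
    """Hypothetical client-side implementation returning canned data."""
    return {"location": location, "temp_f": 72, "conditions": "sunny"}


CLIENT_FUNCTIONS: Dict[str, Callable[..., Dict[str, Any]]] = {"get_weather": get_weather}


def handle_server_message(raw: str) -> List[str]:
    """Return the FunctionCallResponse messages (as JSON text) to send for one server message."""
    message = json.loads(raw)
    if message.get("type") != "FunctionCallRequest":
        return []  # not a function call; nothing to execute
    replies: List[str] = []
    for call in message.get("functions", []):  # assumed: a list of requested calls
        if not call.get("client_side"):
            continue  # the server runs this one itself
        func = CLIENT_FUNCTIONS.get(call["name"])
        if func is None:
            content = json.dumps({"error": f"unknown function: {call['name']}"})
        else:
            content = json.dumps(func(**json.loads(call["arguments"])))
        replies.append(json.dumps({
            "type": "FunctionCallResponse",
            "id": call["id"],  # assumed: echoes the request id so the server can match it
            "name": call["name"],
            "content": content,
        }))
    return replies


# Simulated request, as the server might send it after the user asks about the weather.
request = json.dumps({
    "type": "FunctionCallRequest",
    "functions": [{
        "id": "call_1",
        "name": "get_weather",
        "arguments": json.dumps({"location": "New York"}),
        "client_side": True,
    }],
})
replies = handle_server_message(request)
print(replies[0])
```

In a real client, each string in `replies` would be written to the open WebSocket, after which the agent continues the conversation using the returned content.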

Function Call History

When resuming conversations or providing background context, you can include previous function call history in your agent configuration. This enables the agent to understand past interactions and maintain continuity across sessions.

For detailed information on including function call history, see the Function Call Context documentation.